Rectified Linear Units in Artificial Neural Networks

In a neural network, the activation function is responsible for transforming the summed weighted input of a node into that node's output, or activation. The rectified linear unit (ReLU), also called the rectifier, is an activation function defined as f(x) = max(0, x): it passes positive inputs through unchanged and outputs zero for everything else. Although each of its two pieces is linear, the function as a whole introduces nonlinearity into a deep learning model, and it is simple and cheap to compute. These properties have made ReLU a cornerstone of modern neural network architectures and a widely used default when training deep neural networks. A common best practice is to pair ReLU with He weight initialization.
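To make these ideas concrete, here is a minimal sketch in plain Python (standard library only). It assumes a single fully connected layer, and the names `relu`, `relu_grad`, `he_init` and `dense_relu` are illustrative, not taken from any particular library. ReLU is computed as max(0, x); each node sums its weighted inputs plus a bias before ReLU is applied; and the weights use He (Kaiming) normal initialization, i.e. they are drawn from a zero-mean normal distribution with variance 2 / fan_in. Real frameworks offer their own, more complete versions of these pieces.

```python
import math
import random


def relu(x: float) -> float:
    """Rectified linear unit: pass positive inputs through, clamp the rest to 0."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, else 0 (the value at x == 0 is a convention)."""
    return 1.0 if x > 0 else 0.0


def he_init(fan_in: int, fan_out: int, rng: random.Random) -> list[list[float]]:
    """He (Kaiming) normal initialization: each weight ~ N(0, 2 / fan_in).

    Returns a fan_out x fan_in matrix (one row of weights per output node).
    """
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def dense_relu(x: list[float], weights: list[list[float]], bias: list[float]) -> list[float]:
    """One fully connected layer with ReLU activation.

    Each node computes its summed weighted input (dot product plus bias),
    and ReLU transforms that sum into the node's output.
    """
    return [
        relu(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    weights = he_init(fan_in=4, fan_out=3, rng=rng)
    outputs = dense_relu([1.0, -2.0, 0.5, 3.0], weights, [0.0, 0.0, 0.0])
    print(outputs)  # three non-negative values

    # Nonlinearity: ReLU is not additive.
    print(relu(-1.0) + relu(1.0), relu(-1.0 + 1.0))  # prints "1.0 0.0"
```

The last line shows why ReLU is nonlinear even though each piece is linear: relu(-1) + relu(1) = 1, but relu(-1 + 1) = 0, so the function does not satisfy additivity. The factor of 2 in the He variance compensates for ReLU zeroing out roughly half of its inputs (assuming zero-mean, symmetric pre-activations), which helps keep activation magnitudes from shrinking as they pass through many layers.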

from lassehansen.me

Web the rectified linear unit (relu) or rectifier activation function introduces the property of nonlinearity to a deep learning model and. Web in a neural network, the activation function is responsible for transforming the summed weighted input. Web relu, or rectified linear unit, represents a function that has transformed the landscape of neural network designs. Web the rectified linear unit (relu) function is a cornerstone activation function, enabling simple, neural efficiency for. Web the rectified linear unit (relu) has emerged as a cornerstone in the architecture of modern neural networks,. Best practice to use relu with he. Web what is rectified linear unit (relu) training a deep neural network using relu.

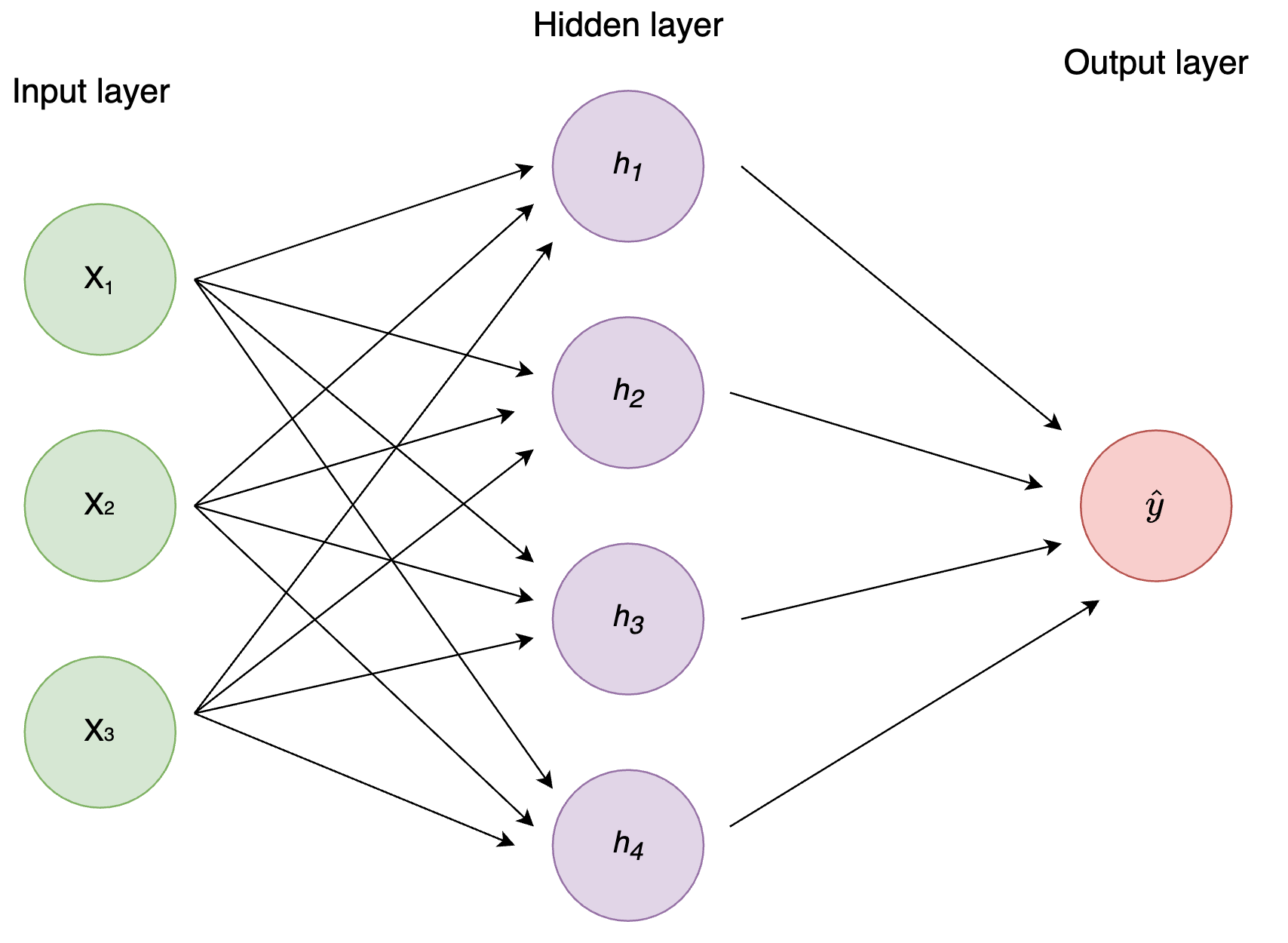

Neural Networks step by step Lasse Hansen

Rectified Linear Unit For Artificial Neural Networks Part 1 Regression (from www.nbshare.io)

Artificial neural network trained to perform the visually cued … (from www.researchgate.net)

153 Artificial Neural Networks Explanation for those who understand … (from www.youtube.com)

Figure A1. Simple neural network. ReLU: rectified linear unit (from www.researchgate.net)

Artificial neural network architecture. ReLU: Rectified Linear Unit (from www.researchgate.net)

Linear Activation Function (from iq.opengenus.org)

A small autoencoder neural network model with 1 input layer (red), 3 … (from www.researchgate.net)

(PDF) Understanding Deep Neural Networks with Rectified Linear Units (from www.researchgate.net)

Rectified Linear Unit (ReLU) Activation Functions (from www.youtube.com)

01. Artificial neural networks, Deep Learning Bible C. Machine … (from wikidocs.net)

Network structure of artificial neural network using rectified linear … (from www.researchgate.net)

(PDF) Application of a Rectified Linear Unit (ReLU) Based Artificial … (from www.researchgate.net)

Structure of the final artificial neural network (ANN) model. The … (from www.researchgate.net)

The conceptual paradigm of the rectified artificial neural network (from www.researchgate.net)

Artificial Neural Network Tutorial: What is Artificial Neural Network (from www.wikitechy.com)

Neural Network Tutorial, Artificial Intelligence Tutorial (from www.vrogue.co)

Understanding Neural Networks: What, How and Why? (from towardsdatascience.com)

Structure of our artificial neural network (ANN) model. Denseblock is … (from www.researchgate.net)